The number of startups entering the AI chip market is growing considerably. Although dozens of startups are developing specialized chips for machine learning, a few really stand out. One of them is Groq, which stands out not only for its technology but also for the way it came into being.

In May 2016, at the Google I/O conference, Google introduced the world to its Tensor Processing Unit (TPU), which had already been in use in its data centers for more than a year. The chips were designed specifically to run Google’s AI stack. Google claimed the TPU delivered “an order of magnitude better-optimized performance per watt for machine learning” than competing products and needed “fewer transistors per operation”, which gave it the ability to “squeeze more operations per second into the silicon”. The Cloud TPU v2 Pod (a cluster of TPUs) performs at 11.5 petaflops and comes with 4 TB of memory. The 512-core Pod slice with a 1-year commitment carries a discounted price of $2.1M per year, so it’s not for the faint of heart.

Source: Google Blog

In March 2017, billionaire VC, early Facebook employee, and Golden State Warriors minority owner Chamath Palihapitiya appeared on CNBC’s morning news show Squawk Box and said that two years earlier (in 2015) he had heard Google mention an AI chip during an earnings call. He immediately sprang into action and went hunting for the engineers who built the Google TPU. After a year and a half, he had wooed away 8 of the 10 engineers behind the TPU, invested $10M in the new team, and Groq was born.

Timeline

- Several years before 2015, Google begins R&D on a new AI chip

- 2015: Google begins to use its TPU internally to power many services

- 2015: Chamath goes on the hunt for Google’s TPU creators

- 2016: Google publicly announces the TPU at Google I/O

- 2016: Groq is born

- 2017: Chamath reveals that, after a year and a half of searching, he wooed away 8 of the 10 original TPU builders

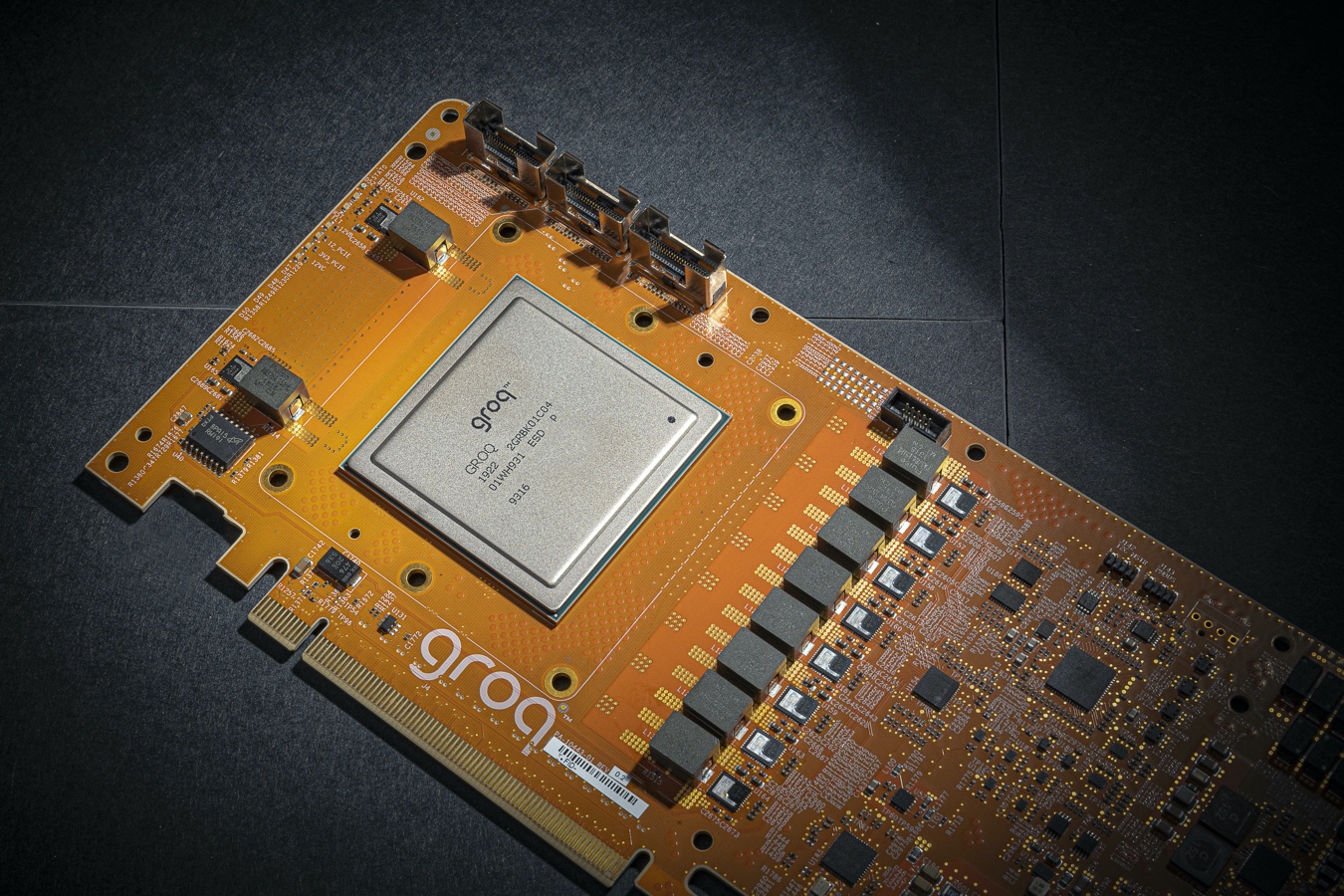

Groq is an AI startup that has developed a chip that, according to Chamath, “is revolutionary on every dimension”. Its Tensor Streaming Processor (TSP) architecture “is capable of 1 PetaOp/s performance on a single chip implementation”, which equates to “one quadrillion operations per second”, along with 250 trillion floating-point operations per second (250 TFLOPS). For comparison, the latest and greatest from AWS, the Inferentia inference chip that powers EC2 Inf1 instances, performs at 128 teraOps (INT8) and 64 teraOps (FP16, BF16). On these headline figures, the Groq chip is a performance beast without equal, for the time being.

Source: Groq PRNewswire
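To put the quoted peak figures side by side, the short Python sketch below computes the ratios. It assumes Groq’s 1 PetaOp/s figure is comparable to Inferentia’s INT8 rating and its 250 TFLOPS figure to Inferentia’s FP16/BF16 rating; the numbers quoted here don’t state the precision behind Groq’s figures, and peak throughput says nothing about real-world inference latency.

```python
# Vendor headline peak figures quoted in the text (not measured benchmarks).
GROQ_TSP_OPS = 1e15            # 1 PetaOp/s = one quadrillion operations per second
GROQ_TSP_FLOPS = 250e12        # 250 TFLOPS
INFERENTIA_INT8_OPS = 128e12   # 128 teraOps (INT8)
INFERENTIA_FP16_OPS = 64e12    # 64 teraOps (FP16, BF16)


def peak_ratio(a: float, b: float) -> float:
    """Ratio of two peak throughputs (how many times larger a is than b)."""
    return a / b


# Assumption: Groq's PetaOp figure is compared against INT8,
# and its TFLOPS figure against FP16/BF16.
int8_ratio = peak_ratio(GROQ_TSP_OPS, INFERENTIA_INT8_OPS)
fp_ratio = peak_ratio(GROQ_TSP_FLOPS, INFERENTIA_FP16_OPS)

print(f"integer ops ratio:    {int8_ratio:.1f}x")
print(f"floating-point ratio: {fp_ratio:.1f}x")
```

If those pairings hold, that works out to roughly 7.8x on integer throughput and 3.9x on floating point.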

The startup is focused on inference, not training, and its chip is “faster” than competing products in the inference market. Its “software-defined hardware” approach has allowed the engineering team to move some of the tasks usually performed by the chip (hardware) to software (the compiler), freeing up the chip to perform more critical functions.
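As a toy illustration of what moving work from the hardware to the compiler can mean, the sketch below computes a fixed, cycle-by-cycle schedule before anything runs. It assumes every operation has a latency known at compile time and that enough functional units exist to run independent ops in parallel; the `Op` type, the op names, and the latencies are all hypothetical, and this is not Groq’s compiler. Because the schedule is settled ahead of time, hardware replaying it would need no runtime dependency checking or instruction reordering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Op:
    """A hypothetical operation whose latency the compiler knows in advance."""
    name: str
    latency: int                  # cycles (assumed fixed and known at compile time)
    deps: tuple[str, ...] = ()    # names of ops whose results this op consumes


def compile_schedule(ops: list[Op]) -> dict[str, int]:
    """Assign each op a start cycle before execution.

    Ops must be listed in dependency order. Since all latencies are known,
    an op can start as soon as its last dependency finishes, so no hardware
    is needed at runtime to detect hazards or reorder instructions.
    """
    start: dict[str, int] = {}
    finish: dict[str, int] = {}
    for op in ops:
        ready = max((finish[d] for d in op.deps), default=0)
        start[op.name] = ready
        finish[op.name] = ready + op.latency
    return start


# Hypothetical inference fragment: two loads feed a matmul, then ReLU, then store.
ops = [
    Op("load_a", 2),
    Op("load_b", 2),
    Op("matmul", 4, ("load_a", "load_b")),
    Op("relu", 1, ("matmul",)),
    Op("store", 2, ("relu",)),
]
schedule = compile_schedule(ops)
for name, cycle in schedule.items():
    print(f"{name:>6} starts at cycle {cycle}")
```

Here `matmul` cannot start until both loads finish at cycle 2, and the whole sequence completes at cycle 9, all known before execution begins.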

Groq has been laser-focused on simplicity, creating a powerful architecture without all the bells and whistles typically associated with chips like Nvidia’s GPUs. The startup has concluded that “standard computing architectures are crowded with hardware features and elements that offer no advantage to inference performance”, which creates an inference bottleneck. In other words, adding complexity to the chip in the form of more “cores, multiple threads, on-chip networks, and complicated control circuitry” is the enemy.

Background

- Company: Groq

- Founded: 2016

- HQ: Mountain View, CA

- Raised: $62.3M

- Employees: 73

- Founders: Jonathan Ross (CEO) and Doug Wightman

- Product: AI chip for inference