The Nvidia H100 sparked the greatest compute land grab in history. While Nvidia is famously tight-lipped about exact shipment volumes, industry trackers provide a staggering picture of the supply hitting the market.

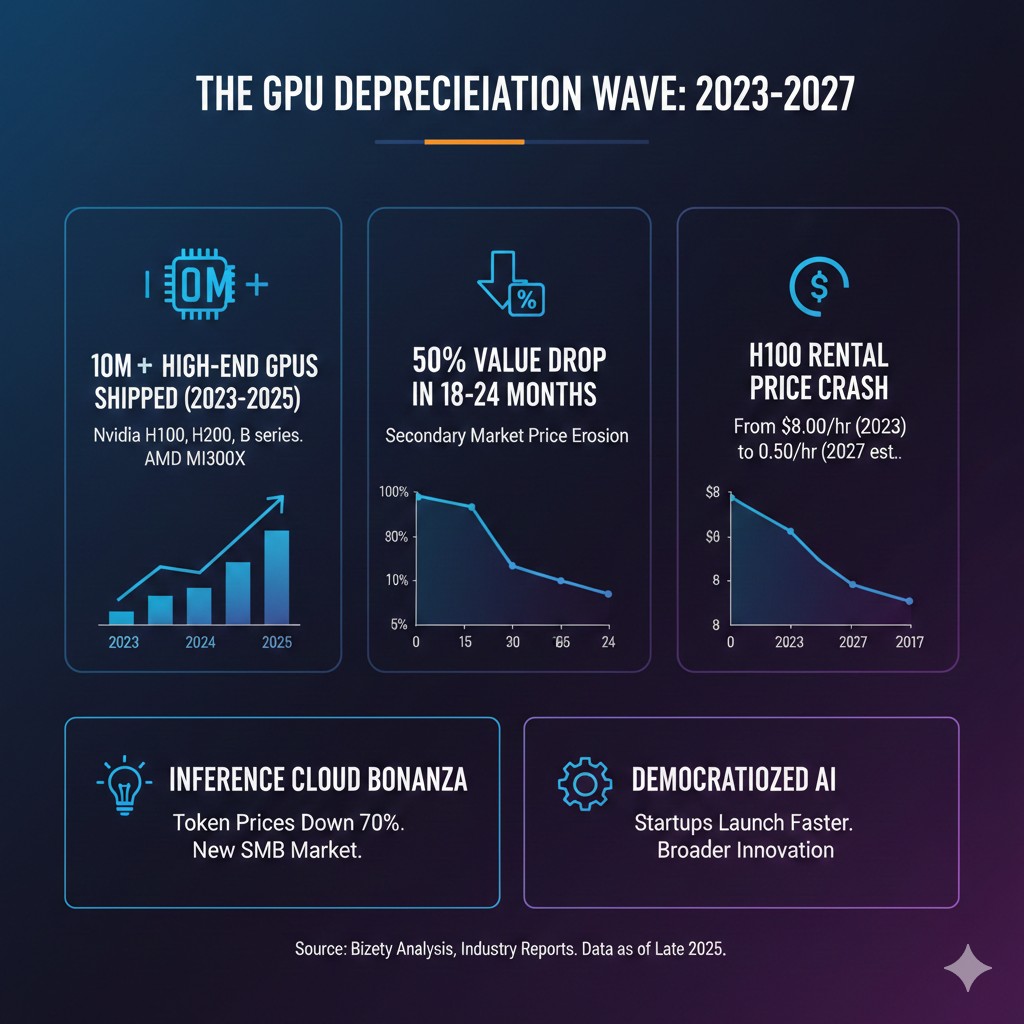

In late 2022, roughly 300,000 units were shipped. That number swelled to over 3.7 million data-center GPUs in 2023, and by the close of 2024, an additional 4 million+ units (H100, H200, and initial Blackwell chips) were deployed. As we move through 2025, with the ramp-up of the B200 and GB300 architectures, total high-end Nvidia GPU deployments have likely surpassed the 10-million-unit mark. The primary owners of this silicon estate are the “usual suspects”: Microsoft, Meta, Google, xAI, AWS, and specialized clouds like CoreWeave.
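As a quick sanity check on these figures, the running total can be tallied in a few lines of Python. This is only a sketch: the names `shipments`, `through_2024`, and `needed_2025` are illustrative, and the inputs are the rounded lower bounds quoted above ("over 3.7 million", "4 million+"), not audited shipment data.

```python
from itertools import accumulate

# Rounded lower-bound figures quoted in the text, in units shipped.
shipments = {
    "late 2022": 300_000,
    "2023": 3_700_000,
    "2024": 4_000_000,
}

# Running total after each period.
running_total = dict(zip(shipments, accumulate(shipments.values())))
for period, total in running_total.items():
    print(f"through {period}: {total:,} units")

through_2024 = running_total["2024"]
# Units that 2025 must add for the installed base to pass 10 million.
needed_2025 = 10_000_000 - through_2024
print(f"needed in 2025 to reach 10M: {needed_2025:,} units")
```

On these numbers the installed base stood at about 8 million units at the end of 2024, so crossing the 10-million mark requires at most about 2 million further units in 2025.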

The 18-Month Half-Life

While cloud providers may try to stretch the accounting life of these assets to six years on their balance sheets, the market value of the hardware tells a different story. Data suggests that high-end GPUs lose approximately 50% of their market value within 18 to 24 months of release.

If we apply the short end of that range, an “18-month cliff,” to the first major wave of H100s sold in late 2022, the value reset began in earnest by Q2 2024. Every later shipment cohort crosses the same mark in turn, which means that right now, in late 2025, hundreds of thousands of GPUs are hitting a massive depreciation wall every single quarter.
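To make the gap between book value and market value concrete, here is a minimal sketch that compares the two. It rests on assumptions that go beyond the text: market value is modeled as a smooth exponential decay with an 18-month half-life that keeps halving after the first 18 months, and book value as straight-line depreciation to zero over six years. Both function names are illustrative.

```python
def market_value_fraction(months: float, half_life_months: float = 18.0) -> float:
    """Fraction of launch value left after `months`, assuming exponential decay."""
    return 0.5 ** (months / half_life_months)


def book_value_fraction(months: float, useful_life_months: float = 72.0) -> float:
    """Fraction of cost still on the books under straight-line depreciation to zero."""
    return max(0.0, 1.0 - months / useful_life_months)


# Compare the two curves at each assumed half-life boundary.
for months in (0, 18, 36, 54, 72):
    market = market_value_fraction(months)
    book = book_value_fraction(months)
    print(f"{months:>2} months: market {market:.1%} of launch value, book {book:.1%} of cost")
```

Under these assumptions, a GPU at the 18-month mark is still carried at 75% of cost on a six-year schedule while its market value has already halved. Swapping in a 24-month half-life narrows the gap but does not close it.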

A Consumer Bonanza

This rapid value collapse creates two very positive realities for the ecosystem:

- For Inference Clouds: Companies like Together AI, Baseten, and Fireworks AI often lease capacity from Neoclouds to run their software layers. As the underlying cost of “renting the rack” drops, these providers can offer far more competitive services, slashing the barrier to entry for developers.

- For the AI Consumer: It is a total bonanza. Unlike hyperscalers (AWS/Google), who can repurpose older GPUs for internal workloads like ad-ranking or search, specialized clouds must compete on price to keep their clusters utilized.

As we enter this first great “Depreciation Wave,” the millions of H100s shipped during the 2023–2024 gold rush are moving into their “secondary economic life.” For the provider, once the initial debt is serviced, rental income beyond operating costs such as power, cooling, and staffing flows largely to margin. For the customer, this lowers the pricing floor to a level that finally makes world-class AI infrastructure affordable for startups and SMBs with limited budgets.

The Inference Revolution: Why Cheap Compute Wins

As GPU costs drop, the cost of the “token” follows suit. We are already seeing the impact:

- Token Price Collapse: In early 2024, 1M tokens on a frontier-class model could cost $15–$20. By late 2025, optimized providers using “paid-off” H100 clusters have driven that price toward $0.50–$2.00 per million tokens for comparable performance.

- The Rise of SMB AI: When compute is expensive, AI is a luxury for funded startups. When the price of compute collapses, AI becomes a utility for SMBs. A local law firm or a mid-sized retailer can suddenly afford to run dedicated, fine-tuned models 24/7 without a six-figure monthly burn.
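The link between hourly GPU price and token price is simple division once a throughput figure is fixed. The sketch below assumes a hypothetical aggregate throughput of 1,000 tokens per second per GPU. Real throughput varies widely with model size, batch size, and serving stack, and the function name and the `utilization` parameter are illustrative. The result is the raw compute cost only, not a retail price, which also covers software, networking, and margin.

```python
def cost_per_million_tokens(
    gpu_hourly_usd: float,
    tokens_per_second: float,
    utilization: float = 1.0,
) -> float:
    """Compute cost in USD of generating one million tokens on a rented GPU."""
    if tokens_per_second <= 0 or not 0 < utilization <= 1:
        raise ValueError("throughput must be positive and utilization in (0, 1]")
    # Tokens actually served per billed hour.
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return gpu_hourly_usd / tokens_per_hour * 1_000_000


ASSUMED_TOKENS_PER_SECOND = 1_000  # hypothetical, not a measured benchmark

# Hourly rates quoted elsewhere in this article.
for hourly in (8.00, 2.50, 0.50):
    cost = cost_per_million_tokens(hourly, ASSUMED_TOKENS_PER_SECOND)
    print(f"${hourly:.2f}/hr -> ${cost:.2f} per 1M tokens")
```

Because the relationship is linear, every halving of the hourly rental price halves the compute cost per token at a fixed throughput. Low utilization works the other way: a cluster that is busy half the time doubles the effective cost.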

The Road to $0.50 per Hour

In 2023, an H100 was a scarce commodity, commanding $8.00/hr. Today, in late 2025, the market has settled into the $2.00–$3.00/hr range for on-demand access, with some marketplaces already dipping below $1.50/hr.

But the real floor is even lower. If we project the current trajectory, accelerated by the massive supply of Blackwell (GB200/GB300) and the looming “Rubin” architecture in 2026, we are heading toward a world of commodity compute.

Prediction: Within the next 24 to 36 months, as the initial 5–6 million H100s reach the end of their primary financing cycles, we might see spot instances for these chips drop to $0.50 per hour or lower.

At $0.50/hr, the “compute barrier” effectively vanishes. A GPU transforms from a capital-heavy asset into a disposable resource, much like basic web hosting in the early 2010s.
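One rough way to test this prediction is to extrapolate the price decline already observed. The sketch below assumes rental prices decay exponentially, takes $2.50/hr as the midpoint of the current $2.00–$3.00 range, and assumes about 24 months elapsed between the $8.00/hr peak and today. All three are simplifying assumptions, and the function names are illustrative.

```python
import math


def implied_half_life_months(start_price: float, end_price: float, elapsed_months: float) -> float:
    """Half-life of an exponential price decline from start_price to end_price."""
    return elapsed_months * math.log(2) / math.log(start_price / end_price)


def months_until_price(current_price: float, target_price: float, half_life_months: float) -> float:
    """Months for the price to fall from current_price to target_price."""
    return half_life_months * math.log2(current_price / target_price)


# Assumed inputs: the $8.00 peak, the midpoint of today's range, ~24 months apart.
half_life = implied_half_life_months(start_price=8.00, end_price=2.50, elapsed_months=24)
remaining = months_until_price(current_price=2.50, target_price=0.50, half_life_months=half_life)

print(f"implied price half-life: {half_life:.1f} months")
print(f"months until $0.50/hr at the same pace: {remaining:.1f}")
```

With these inputs the implied half-life is a little over 14 months and the $0.50/hr mark arrives in roughly 33 months, inside the 24-to-36-month window. The result is sensitive to the assumed elapsed time: a slower historical decline pushes the date out, while a supply shock from newer architectures would pull it in.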

Conclusion: The Installed Base is the Prize

The tech industry often obsesses over the “newest and fastest,” but for the broader economy, the installed base of slightly older GPUs is more important.

A world with 10 million “depreciated” but highly capable GPUs is a world where:

- Startups spend their seed rounds on talent, not cloud bills.

- Enterprises deploy agents that can “think” for minutes, not seconds, because the cost of reasoning is no longer prohibitive.

- Innovation shifts from “who has the most GPUs” to “who has the best implementation.”

While the Neoclouds may struggle with the accounting reality of rapid depreciation, the rest of the world is about to enjoy a golden age of affordable intelligence. The GPU “bubble” may be deflating for investors, but for the consumers of AI, the party is just getting started.